Super AI - Coming Soon To A World Near You

October 25, 2019

The emergence of artificial intelligence (AI) represents one of the most important turning points in human history, and it is squarely upon us. But what comes next will make life as we know it unrecognizable, and there will be no turning back. So-called super artificial intelligence (super AI), which would be orders of magnitude more intelligent than the human brain, might be achievable in our lifetimes. World-renowned futurist Ray Kurzweil refers to this as the moment we reach the singularity. The term is borrowed from physics and refers to a point beyond which we can neither predict the outcome nor come back. Are we prepared for what will be a quantum change in humanity?

We are already in the age of narrow artificial intelligence. In many areas, computers have surpassed human abilities. And with the advances being made in machine learning, computers no longer need explicit human programming to achieve certain narrowly defined goals; they teach themselves. A few years back, Google’s DeepMind division created a program called AlphaGo to master the ancient Chinese game Go. In case you are not familiar with the game, it requires very complex strategies. Each of the 361 points on the board can be empty, black or white, so there are up to 3 to the power of 361 possible board configurations. That’s more than all the atoms that exist in the known universe! AlphaGo beat one of the world’s top players. A subsequent version, AlphaGo Zero, used no data from human games and instead played against itself to become proficient. It surpassed the strength of that predecessor in just three days.
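The comparison between Go and the number of atoms can be checked with a few lines of Python. This is only a rough sketch: 3 to the power of 361 is an upper bound that also counts arrangements that are not legal positions, and the figure of 10 to the power of 80 atoms is a commonly quoted estimate assumed here for illustration, not a number from this article.

```python
# Each of the 19 x 19 = 361 points on a Go board is empty, black, or white,
# so 3**361 is an upper bound on board configurations (not all are legal).
board_points = 19 * 19
go_upper_bound = 3 ** board_points

# Assumption: a commonly quoted rough estimate of the number of atoms
# in the observable universe.
atoms_estimate = 10 ** 80

# Python integers have arbitrary precision, so the comparison is exact.
go_digits = len(str(go_upper_bound))
exceeds_atoms = go_upper_bound > atoms_estimate

print(f"3^361 has {go_digits} digits")
print(f"Larger than the atom estimate: {exceeds_atoms}")
```

Even with the large uncertainty in the atom estimate, the gap is so wide that the comparison holds comfortably.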

The time when AI can perform at the level of the human brain (artificial general intelligence or AGI) may not be that far off. While no one can say definitively, Kurzweil predicts it will happen by 2029. Other experts put it somewhere between 2040 and 2050. And still others suggest it might not happen this century.

Whatever the timeframe, you would be forgiven for scoffing at AGI as something out of a sci-fi movie. But consider the facts. Although the human brain is more complex, computers can process information a lot faster and therefore LEARN a lot faster. We tend to think of learning in a linear fashion, in step-by-step increments. From Moore’s Law, we already know that the number of transistors on a chip, and with it processing power, has doubled approximately every two years. Many experts don’t see progress slowing down, given the introduction of new materials that allow for even smaller circuits, not to mention the advances being made in neural networks (AI loosely modelled after the human brain) and quantum computing. Just a few days ago we saw a stunning development by Google. One of its advanced computers achieved "quantum supremacy" for the first time, surpassing the performance of conventional devices on a specific task. The technology giant's Sycamore quantum processor performed a calculation in 200 seconds that, by Google's estimate, would take the world's best supercomputers 10,000 years to complete.

These advances create exponential, not linear, growth. For example, 30 linear steps get you to 30. One, two, three, four… at step 30 you're at 30. With exponential growth, it's one, two, four, eight… by step 30 you're past half a billion, and one more doubling takes you past a billion.
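The difference between the two kinds of growth is easy to verify. The sketch below is purely illustrative; the function names are made up for this example, and it assumes both sequences start at one, as in the counting above.

```python
def linear_steps(n):
    """Return the first n values of 1, 2, 3, 4, ..."""
    return list(range(1, n + 1))


def doubling_steps(n):
    """Return the first n values of 1, 2, 4, 8, ... (doubling each step)."""
    return [2 ** k for k in range(n)]


steps = 30
linear = linear_steps(steps)
doubling = doubling_steps(steps)

print(f"Linear, step {steps}:   {linear[-1]:,}")
print(f"Doubling, step {steps}: {doubling[-1]:,}")
print(f"One more doubling:    {doubling[-1] * 2:,}")
```

Starting from one, the doubling sequence reaches 536,870,912 at step 30 and 1,073,741,824 at step 31, while the linear sequence has only reached 30.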

So, what happens once AGI is achieved? There will be an intelligence explosion which will usher in the dawn of super AI, which many suggest will be millions of times more intelligent than the human brain.

The implications of this have many experts worried, as we haven’t thought through, or protected against, the potential dangers to our species. As I mentioned in my article (Consciousness), folks like Elon Musk, Bill Gates and Stephen Hawking have all sounded the alarm.

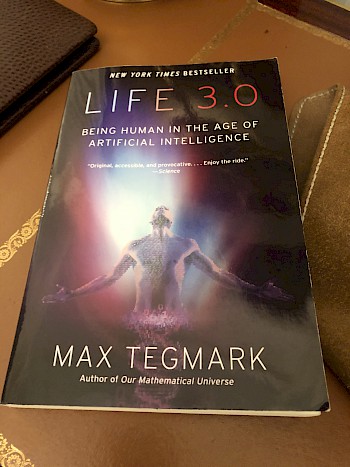

Enter MIT’s Max Tegmark. His book, Life 3.0, not only addresses the future of AI but, more importantly, what safety systems we need to start thinking about immediately.

In my Consciousness article, I suggested that we need to understand what consciousness is and whether AI can achieve it and therefore potentially become an existential threat. Although Tegmark sees consciousness as an issue, he makes a very good case that AI does not need to be self-aware to be a danger to humanity. As he puts it, a machine does not need to be malevolent; it simply needs to be competent at achieving its goals to be a potential threat. He uses the example of asking a self-driving car to get to the airport as quickly as possible. The terrified passenger may arrive covered in vomit and chased by police helicopters, but the car was simply achieving its goal as efficiently as possible. The problem, of course, is that the car’s “goals” were not aligned with our human goals. Now substitute autonomous lethal weapons, nuclear weapons, the power grid, the Internet or any other important system for the car, and you can imagine what damage could be done if safety measures are not built in.

Additionally, how do we align our values with those of an efficient AI? Tegmark uses an analogy of how humans interact with ants. If we want to build a power plant that sits over a large ant colony we will go ahead and do so – too bad for the ants. Super AI may someday look at us in the same way. No malevolence needed.

Our challenge will be to balance AI competence with human wisdom and values. It’s not an easy task in a world where companies and countries are competing in what may be “winner takes all” stakes. This challenge is even more profound given the current tension between China and the US, especially given that China leads the world in AI research. And even if we were to cooperate, how do we agree on whose values and priorities we implement?

Despite these seemingly insurmountable issues, we have little choice but to try. Tegmark is helping to lead the charge. He organized a gathering of many of the leading AI researchers to discuss the issues we face. With the backing of Musk, he launched the Future of Life Institute (futureoflife.org), which works to mitigate existential risks facing humanity, particularly from advanced AI. Musk and a group of investors have also launched OpenAI with a $1 billion pledge. OpenAI’s (openai.com) mission is to ensure that artificial general intelligence benefits all of humanity.

We know narrow artificial intelligence is already dramatically changing our world through job disruption. (Read my article on Yuval Noah Harari’s book 21 Lessons for the 21st Century.) There are very few jobs that AI isn’t already capable of replacing. And I am not just talking about truck drivers, but lawyers and doctors too.

What super AI will usher in is on a completely different level. The public needs to be fully informed of the implications of this technology. The future of mankind is at stake, and we need to encourage and support the kind of proactive thinking shown by leaders such as Musk and Tegmark.

In upcoming articles, I will look at the positive side of AI and what the future of life itself may look like.